Docker

An Introduction

In the past, transitioning a software application from code to production was a painfully slow process. Developers often struggled with the tedious task of managing dependencies while setting up their test and production environments. Docker, a type of container technology, emerged as a solution to alleviate this pain.

From Bare Metal to Docker

- Bare metal: Applications ran directly on physical servers. Teams purchased, racked, stacked, powered on, and configured every new machine.

- Hardware virtualization: a hypervisor lets multiple virtual machines run on a single powerful physical server.

- Infrastructure-as-a-service (IaaS): IaaS removed the need to set up physical hardware and provided virtual resources on demand (e.g., Amazon EC2).

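- Platform-as-a-service (PaaS): PaaS provides a managed development platform to simplify deployment. However, applications could still behave inconsistently across environments, because the local development setup and the cloud runtime were not packaged together.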

- Docker: containerization, which packages an application with its dependencies and runs it as an isolated process.

Innovations of Docker

Docker improved upon PaaS through two key innovations.

Lightweight Containerization

Container technology is often compared to virtual machines. A VM hypervisor emulates the underlying server hardware, such as CPU, memory, and disk, so that multiple virtual machines can share the same physical resources. In contrast, Docker containers share the host operating system kernel: the Docker engine does not emulate hardware or boot a separate guest OS for each container.

Containers achieve isolation through Linux namespaces and control groups (cgroups). Namespaces provide separation of processes, networking, mounts, and other resources. cgroups limit and meter usage of resources like CPU, memory, and disk I/O for containers.

As a result, containers are more lightweight and portable than VMs, and many containers can share one host and its resources. They also start much faster, because no full guest operating system has to boot.

In short, Docker is not lightweight hardware virtualization. It uses Linux kernel primitives to isolate processes rather than virtualizing hardware as a hypervisor does.
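Namespaces and cgroups are ordinary kernel features that every Linux process already has. Docker does not add them on top. The following minimal sketch assumes a Linux host and does not need Docker. It lists the namespaces and cgroup of the current shell:

```shell
#!/bin/sh
# Each entry under /proc/self/ns is a namespace this process belongs to,
# shown as a symlink such as "pid:[4026531836]". Processes that show the
# same number for a namespace share that namespace.
ls -l /proc/self/ns

# The cgroup path(s) of the current process. Docker places each container
# in its own cgroup so that CPU, memory, and I/O limits can be enforced.
cat /proc/self/cgroup

# Record this shell's PID namespace for comparison with a container's.
host_pid_ns=$(readlink /proc/self/ns/pid)
echo "host PID namespace: $host_pid_ns"
```

Running docker run --rm alpine readlink /proc/self/ns/pid should then print a different number from the host's, because Docker starts each container in a new PID namespace. The alpine image is only a small example.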

Application Packaging

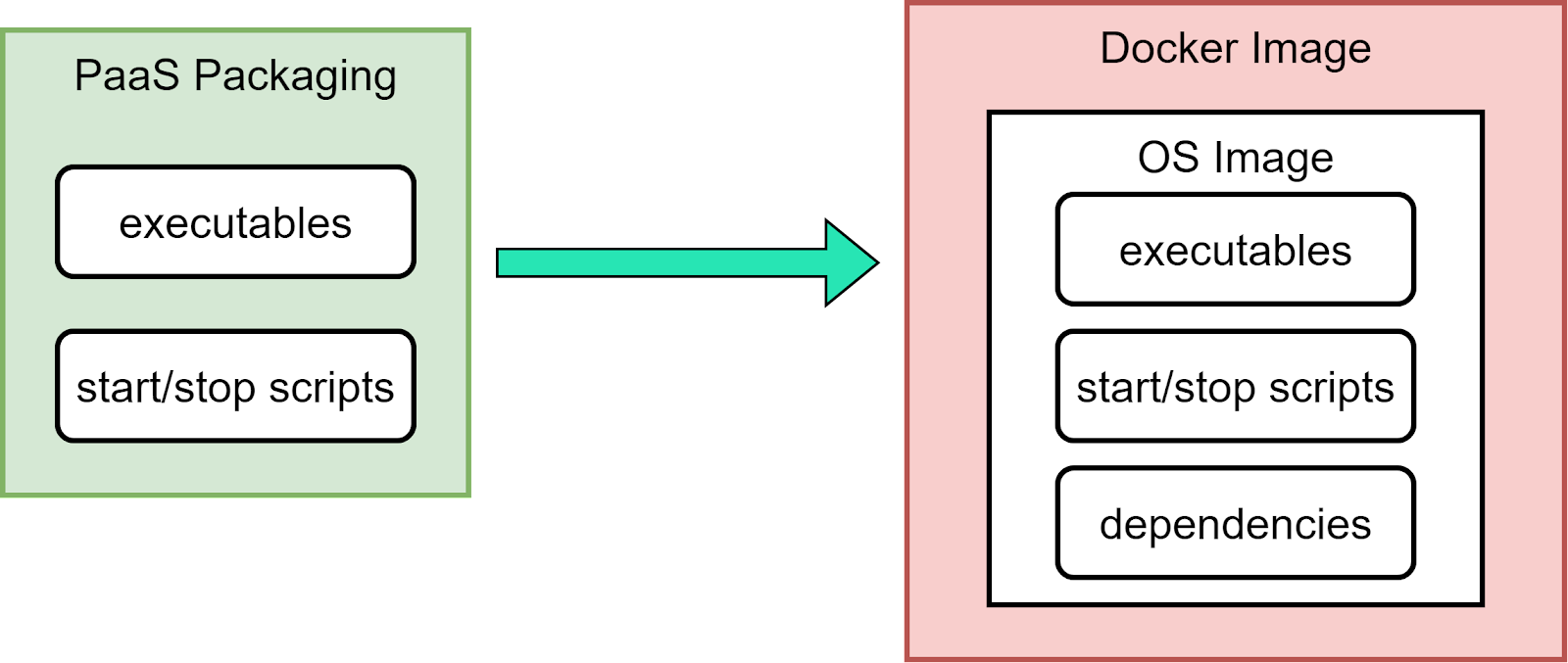

Docker solved two key PaaS packaging problems with container images:

- Bundling the app, configs, dependencies, and OS files (the userland, not the kernel) into a single deployable image

- Keeping the local development environment consistent with the cloud runtime environment

The figure above shows the comparison (borrowed from A Crash Course in Docker).

Image and Runtime

A container image bundles up code, dependencies, configs, and a root filesystem into a single static artifact. It is like a compiled executable, containing everything needed to run the application code in an isolated environment. The Docker engine unpacks this image and starts a container process to run the application in an isolated namespace, like a sandboxed process on Linux.

The image provides a static view of the application to be run, and a container is a running instance of that image. The image is the build artifact. The runtime provides an isolated environment in which to launch it.

The bottom layers of the image provide the base OS and dependencies, and the top layers add the application code and configs. When the container runtime starts the image, it adds a thin writable layer on top and runs the application as an isolated process.

This separation of static image and dynamic runtime solves the dependency problems of PaaS. The image is a consistent packaging format from development to test to production, and OS-level isolation lets it run the same way across environments.
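You can see this layering by building a small image yourself. The following is a sketch under stated assumptions. The helper name build_layer_demo, the image name layer-demo, and the python:3.12-slim base image are illustrative choices and not part of any standard workflow.

```shell
# Hypothetical helper: build and run a tiny two-part image in directory "$1".
build_layer_demo() {
  dir="$1"
  # Application code: this ends up in the top layer of the image.
  cat > "$dir/app.py" <<'EOF'
print("hello from the top layer")
EOF
  # FROM supplies the bottom layers (base OS files + Python runtime);
  # COPY adds a new layer containing only the application code.
  cat > "$dir/Dockerfile" <<'EOF'
FROM python:3.12-slim
COPY app.py /app/app.py
CMD ["python", "/app/app.py"]
EOF
  # Static artifact: build the image, then list its layers (newest first).
  docker build -t layer-demo "$dir"
  docker history layer-demo
  # Dynamic instance: start a container from the image, remove it on exit.
  docker run --rm layer-demo
}
```

Call it as build_layer_demo "$(mktemp -d)". In the docker history output, the rows for COPY and CMD appear above the rows inherited from python:3.12-slim. If you edit app.py and rebuild, only the layers from COPY upward should be rebuilt.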

Cgroups, Namespaces, and rootfs

- Namespaces: isolate what a container can see; a container is a special type of process

- Cgroups: limit how much CPU, memory, and I/O a container can use

- rootfs: the container's root filesystem, provided by the image
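The following hedged sketch shows all three building blocks on a running container. The helper name inspect_isolation, the container name demo, and the 256 MiB / 0.5 CPU limits are arbitrary examples. The sketch also assumes sudo access, because reading another process's namespace links normally requires root.

```shell
# Hypothetical helper: start a resource-limited container and look at its
# cgroup limits, namespaces, and root filesystem from the host.
inspect_isolation() {
  name="${1:-demo}"
  # cgroups: cap the container at 256 MiB of memory and half a CPU core.
  docker run -d --name "$name" --memory 256m --cpus 0.5 nginx
  # The limits as recorded by Docker (bytes and billionths of a CPU).
  docker inspect --format '{{.HostConfig.Memory}} {{.HostConfig.NanoCpus}}' "$name"
  # Namespaces: the container is an ordinary host process, so look up its
  # host PID and list the namespaces it belongs to.
  pid=$(docker inspect --format '{{.State.Pid}}' "$name")
  sudo ls -l "/proc/$pid/ns"
  # rootfs: the container sees the image's filesystem, not the host's.
  docker exec "$name" cat /etc/os-release
}
```

Run inspect_isolation demo, then clean up afterwards with docker rm -f demo.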

After installing Docker

Start Docker

sudo systemctl start docker

Make Docker start automatically when the machine reboots

sudo systemctl enable docker

Here are some commands that you may need to fix potential problems or check status.

Reload systemd unit files (needed after changing Docker's service configuration)

sudo systemctl daemon-reload

Restart Docker

sudo systemctl restart docker

Check Docker status

sudo systemctl status docker
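After starting the service, a quick sanity check could look like the sketch below. check_docker is a hypothetical helper name. If your user is not in the docker group, prefix the docker commands with sudo.

```shell
# Hypothetical helper: confirm that the daemon is up and can run containers.
check_docker() {
  # Prints "active" when the systemd unit is running.
  systemctl is-active docker
  # Shows client and server versions; the command fails if the CLI
  # cannot reach the daemon.
  docker version
  # End-to-end test: pull a tiny image, run it once, delete the container.
  docker run --rm hello-world
}
```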

Docker commands

Image

An image is a read-only template for creating containers.

# Search Docker Hub for nginx images

docker search nginx

# Download the nginx image from Docker Hub (uses the latest tag by default)

docker pull nginx

# Download a specific tag: latest or an explicit version

docker pull nginx:latest

docker pull nginx:1.26.0

# List local images

docker images

# Remove (delete) an image by name:tag or IMAGE ID

docker rmi nginx:1.26.0

# Remove all images that are not used by any container

docker image prune -a
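The image commands can be combined into one round trip. image_lifecycle below is a hypothetical helper, and nginx:1.26.0 is simply the version pulled above.

```shell
# Hypothetical helper: pull one tagged image, list it, then remove it.
image_lifecycle() {
  repo="${1:-nginx}"
  tag="${2:-1.26.0}"
  docker pull "$repo:$tag"
  # Passing a repository name limits the listing to that repository.
  docker images "$repo"
  # rmi removes this tag; a layer is deleted only when no other image uses it.
  docker rmi "$repo:$tag"
}
```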

Container

# Create and start a container from an image

docker run [OPTIONS] IMAGE [COMMAND]

# Run a PyTorch container named mypytorch in the background (-d)

docker run -d --name mypytorch pytorch/pytorch

# Publish a container port on the host: 88 is the host port, 80 is the container port

# The host port (88) must not already be in use on the host

docker run -d -p 88:80 nginx

# Run a container named mypytorch with all GPUs in the background (-d), keeping STDIN open (-i) and allocating a pseudo-TTY (-t)

docker run -t -i -d --gpus all --name mypytorch pytorch/pytorch

# List running containers

docker ps

# List all containers, including stopped ones

docker ps -a

# Stop a running container

docker stop CONTAINER

# Stop the container named mypytorch

docker stop mypytorch

# Start a stopped container

docker start CONTAINER

# Start the container whose ID begins with 4056 (any unique prefix of the ID works)

docker start 4056

# Restart a container

docker restart CONTAINER

# Show live CPU, memory, network, and I/O usage of running containers

docker stats

# Show a container's logs (add -f to follow new output)

docker logs CONTAINER

# Remove a stopped container

docker rm CONTAINER

# Force-remove all containers, including running ones (use with care)

docker rm -f $(docker ps -aq)

# Run a command inside a running container

docker exec [OPTIONS] CONTAINER COMMAND

# Open an interactive bash shell in the container named mypytorch

docker exec -it mypytorch /bin/bash

# Open an interactive bash shell in the container whose ID begins with 4056

docker exec -it 4056 /bin/bash
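The following sketch walks one container through its full lifecycle using the commands above. The helper name nginx_lifecycle and the container name mynginx are illustrative assumptions. The sketch also assumes curl is installed and host port 88 is free.

```shell
# Hypothetical helper: full lifecycle of one container named "mynginx".
nginx_lifecycle() {
  # Start nginx in the background; host port 88 forwards to container port 80.
  docker run -d --name mynginx -p 88:80 nginx
  # Give nginx a moment to start, then fetch the welcome page from the host.
  sleep 1
  curl -s http://localhost:88 | head -n 5
  # Confirm it is running, read its output, then stop and delete it.
  docker ps --filter name=mynginx
  docker logs mynginx
  docker stop mynginx
  docker rm mynginx
}
```

The one-second sleep is a crude wait. A more robust script would retry until the port answers.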

Note: the docker exec -it command:

The -it option starts an interactive session with a pseudo-TTY in the container, which sets up a terminal inside the container. It combines two flags, -i and -t.

- -i or --interactive: keeps the standard input (STDIN) open for the command you are running in the container.

- -t or --tty: allocates a pseudo-TTY (pseudo-teletypewriter), which simulates a terminal.
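You can observe the effect of each flag separately. This sketch assumes the small alpine image. demo_it_flags is a hypothetical helper name. The comments describe the expected behavior, not captured output.

```shell
# Hypothetical helper contrasting -i and -t.
demo_it_flags() {
  # -i: STDIN stays open, so the piped text reaches cat inside the container.
  echo "kept by -i" | docker run --rm -i alpine cat
  # No -i: STDIN is not attached, so cat reads nothing and prints nothing.
  echo "dropped" | docker run --rm alpine cat
  # -t: a pseudo-TTY is allocated, so tty should print a /dev/pts/... device.
  docker run --rm -t alpine tty
  # No -t: there is no terminal, so tty should report that it is not a tty.
  docker run --rm alpine tty
}
```

An interactive shell such as docker exec -it mypytorch /bin/bash needs both flags. -i carries your keystrokes in, and -t gives the shell a terminal to draw its prompt on.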

Save container

# Commit: create a new image from a container's current state

docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

# Commit the container mypytorch with a message, as image repository mypytorch with tag v1.0

docker commit -m "init commit" mypytorch mypytorch:v1.0

# Save: write one or more images to a tar archive

docker save -o FILE IMAGE

# Save the image mypytorch:v1.0 to the tar file mypytorch.tar

docker save -o mypytorch.tar mypytorch:v1.0

# Load: restore images from a tar archive

docker load -i FILE

# Load the tar file mypytorch.tar

docker load -i mypytorch.tar
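Together, commit, save, and load let you move a customized environment to another machine without a registry. The sketch below uses export_container_image as a hypothetical helper name.

```shell
# Hypothetical helper: snapshot a container and write the image to a tar file.
# Arguments: container name, image name:tag, output archive path.
export_container_image() {
  container="$1"
  image="$2"
  archive="$3"
  # commit does not capture data stored in volumes mounted into the container.
  docker commit -m "snapshot" "$container" "$image"
  docker save -o "$archive" "$image"
  # The archive is a plain tar file (image layers plus JSON metadata).
  tar -tf "$archive" | head -n 10
}
```

For example, run export_container_image mypytorch mypytorch:v1.0 mypytorch.tar. Then copy mypytorch.tar to the target machine and run docker load -i mypytorch.tar there.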

Share image

# Log in to Docker Hub

docker login

# Tag the image under your Docker Hub username so that it can be pushed

docker tag mypytorch:v1.0 username/newname:v1.0

# Push the image

docker push username/newname:v1.0

# Also tag and push latest, which docker pull uses when no tag is given

docker tag mypytorch:v1.0 username/newname:latest

docker push username/newname:latest
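You can wrap the tag-and-push steps so that every release is pushed under both its version and latest. publish_image is a hypothetical helper. Run docker login once beforehand.

```shell
# Hypothetical helper: push one local image under a version tag and "latest".
# Arguments: local image, Docker Hub user, repository name, version tag.
publish_image() {
  src="$1"
  user="$2"
  name="$3"
  version="$4"
  for tag in "$version" latest; do
    docker tag "$src" "$user/$name:$tag"
    docker push "$user/$name:$tag"
  done
}
```

Example: publish_image mypytorch:v1.0 username newname v1.0. Both tags point to the same image, so the second push should report that its layers already exist instead of uploading them again.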

Docker storage

Volume

Data written to a container's own filesystem is deleted when the container is removed. To keep data permanently, store it in a volume. You can think of a volume as a directory (or file) that exists independently of any single container.

# List volumes

docker volume ls

# Create a named volume

docker volume create volumename

# Show details of a volume, including its mountpoint on the host

docker volume inspect volumename

# Run a container with a bind mount, host path:container path (the host path must be absolute)

docker run -it -v /host/abs/path:/path/in/docker imagename

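# Workaround for "permission denied" errors: run the container in privileged mode

# Warning: --privileged gives the container extended access to the host; use it only on trusted machines

docker run --privileged=true IMAGE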

# Quick example: GPU container with a bind-mounted project directory

sudo docker run -it -d --gpus all --name RL_reasoning --privileged=true -v /path/to/project:/workspace/project pytorch/pytorch
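The -v /host/path:/container/path form above is a bind mount of a host directory. A named volume, created with docker volume create, is managed by Docker instead. The following sketch shows that data in a named volume survives container removal. demo_volume and demo-data are hypothetical names, and alpine is a small example image.

```shell
# Hypothetical helper: show that data in a named volume outlives containers.
demo_volume() {
  vol="${1:-demo-data}"
  docker volume create "$vol"
  # Container 1 writes a file into the volume and is removed on exit (--rm).
  docker run --rm -v "$vol":/data alpine sh -c 'echo persisted > /data/note.txt'
  # Container 2 is brand new but mounts the same volume, so the file is there.
  docker run --rm -v "$vol":/data alpine cat /data/note.txt
  # Deleting the volume is what finally deletes the data.
  docker volume rm "$vol"
}
```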

FAQ

The Docker extension for VS Code is not working.

When running a docker command, the command line shows: Cannot connect to the Docker daemon at unix:///path/to/.docker/desktop/docker.sock. Is the docker daemon running?

The daemon's socket is actually at unix:///var/run/docker.sock, but the CLI is looking for it at the Docker Desktop path above, so the connection fails.

Solution:

Create the symbolic link:

ln -s /var/run/docker.sock /path/to/.docker/desktop/docker.sock

Restart docker service:

sudo systemctl restart docker
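Another option, instead of the symbolic link, is to point the CLI back at the system daemon. This assumes the error comes from the CLI having been switched to a Docker Desktop context (for example, after installing Docker Desktop), which may not match your setup. use_system_daemon is a hypothetical helper name.

```shell
# Hypothetical helper: point the docker CLI back at the system daemon.
use_system_daemon() {
  # List known endpoints; the active context is marked with "*".
  docker context ls
  # "default" talks to /var/run/docker.sock unless DOCKER_HOST overrides it.
  docker context use default
}
```

For a single command, setting DOCKER_HOST also works: DOCKER_HOST=unix:///var/run/docker.sock docker ps.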

GPU driver fails to load

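Before running containers with --gpus, you may need to install NVIDIA's container runtime. The commands below use the legacy nvidia-docker2 package; current NVIDIA documentation recommends the NVIDIA Container Toolkit instead.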

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update

sudo apt-get install -y nvidia-docker2

sudo systemctl restart docker
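Once the runtime is installed, you can check GPU access in three steps. In the sketch below, check_gpu is a hypothetical helper. The ubuntu image is just a minimal example, and pytorch/pytorch is the image used earlier.

```shell
# Hypothetical helper: check GPU access from the host and from containers.
check_gpu() {
  # 1. Host driver: should list your GPUs without Docker involved.
  nvidia-smi
  # 2. Runtime: with --gpus, the NVIDIA runtime should make nvidia-smi
  #    available even inside a plain ubuntu container.
  docker run --rm --gpus all ubuntu nvidia-smi
  # 3. Framework: the PyTorch image should report True for CUDA.
  docker run --rm --gpus all pytorch/pytorch \
    python -c "import torch; print(torch.cuda.is_available())"
}
```

If step 1 fails, the problem is the host driver. If only step 2 fails, the container runtime is the likely cause.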

Reference

Docker Tutorial by Hanzhi Zhang: setup docker DL env

尚硅谷3小时速通Docker教程 (Atguigu's 3-hour Docker crash course; delivered in Chinese)